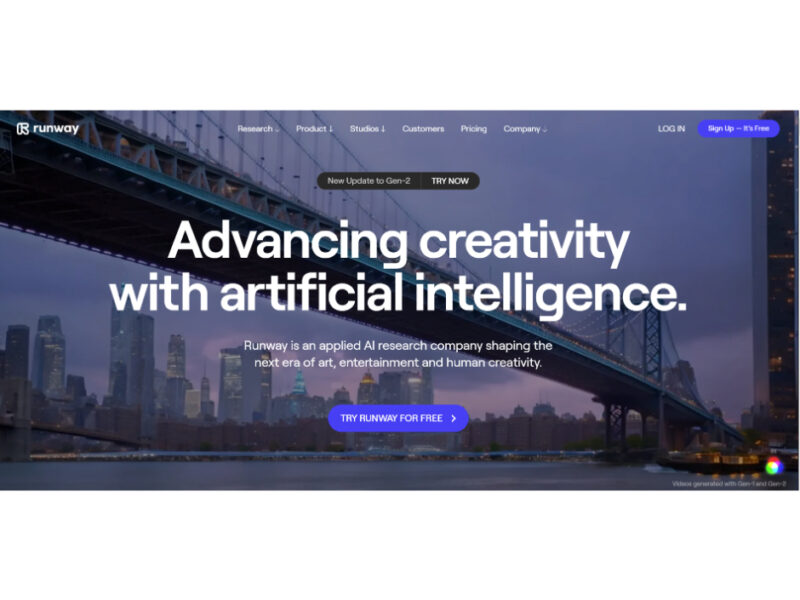

Runwayml

Runwayml is an AI-powered creative toolkit for artists, designers, and creators. It offers an extensive array of tools that make …

About Runwayml

Use Cases

Use Case 1: Rapid Cinematic Prototyping for Film and Advertising

Problem: Traditional film production and high-end video advertising require massive budgets for location scouting, set building, and complex visual effects (VFX). Small studios or marketing teams often lack the resources to create cinematic-quality visuals, leading to a "production gap" between their ideas and the final output.

Solution: With Gen-4.5, creators can generate high-fidelity, cinematic video sequences from simple text prompts or source images. The model's motion quality and close adherence to prompts let users produce professional-grade visual assets without a physical camera crew or expensive CGI software.

Example: A marketing agency needs a 15-second "cinematic" shot of a futuristic car driving through a neon-lit rainstorm. Instead of hiring a VFX house, the team uses Gen-4.5 to generate the footage, specifying the lighting, camera movement, and weather effects so that the result matches the brand's aesthetic.

Use Case 2: Interactive Architectural Pre-Visualization

Problem: Architects often struggle to communicate the "feel" of a space using only static 3D renders. Animating these projects manually is time-consuming and requires specialized software expertise, making it difficult for clients to explore a proposed building's environment dynamically.

Solution: Architectural firms (such as KPF) can use Runway's General World Models (GWM) to animate architectural renderings in-house. With GWM Worlds, they can create interactive, explorable environments in which a client can see how light falls on a building at different times of day or how the surrounding environment interacts with the structure.

Example: An architect uploads a static 3D render of a new museum. They use Runway to animate the surrounding trees, moving traffic, and changing weather conditions, providing the client with a realistic video walkthrough that demonstrates the building’s impact on the local urban landscape.

Use Case 3: Autonomous Real-Time Video Brand Ambassadors

Problem: Standard text-based chatbots often feel impersonal and fail to engage customers deeply. At the same time, hiring live actors for 24/7 video support is financially and logistically impractical for most businesses.

Solution: GWM Avatars lets companies deploy fully autonomous, real-time video agents. These agents are capable of natural conversation and contextual awareness, giving AI interactions a "human-like" face.

Example: An e-commerce brand integrates a GWM Avatar into its website to act as a virtual stylist. The avatar "listens" to the customer's preferences in real time through a video interface and visually demonstrates how different clothing items might look, offering personalized recommendations through natural, face-to-face conversation.

Use Case 4: Safe Simulation Training for Robotics and Autonomy

Problem: Training robots to handle physical objects or navigate complex environments is dangerous and expensive when done exclusively in the real world. Physical hardware can break, and covering every possible "edge case" (such as a robot dropping a glass) is difficult in traditional, hand-programmed simulations.

Solution: GWM Robotics enables developers to simulate physical interactions and robotic behaviors through learned world models. These models predict how actions unfold in the real world, so a robot can "learn" from a large number of simulated physical interactions before it is ever deployed on physical hardware.

Example: A logistics company developing a robotic sorting arm uses Runway to simulate how the arm responds to packages of different weights and textures. The team runs thousands of "virtual trials" in a simulated warehouse environment to optimize the robot's grip and speed without risking damage to actual inventory.

Use Case 5: Dynamic World-Building for Game Developers

Problem: Open-world video games require thousands of hours of manual labor to design unique, non-repetitive environments. Small indie developers often have to rely on "procedural generation," which can feel soulless or repetitive.

Solution: GWM Worlds provides a way to build "infinite explorable realities" in real time. Developers can use these interactive world models to generate unique, visually consistent environments that respond dynamically to player actions rather than following a pre-written script.

Example: An indie game developer uses GWM Worlds to create a "dream sequence" level in which the environment constantly morphs based on the player's movement. Instead of coding every transformation by hand, the developer lets the model simulate the world's physics and visual changes on the fly, creating a different experience for every player.

Key Features

- High-fidelity video generation

- Interactive world simulation

- Conversational real-time video agents

- Physical interaction simulation

- Precise video generation control

- Real-time world modeling