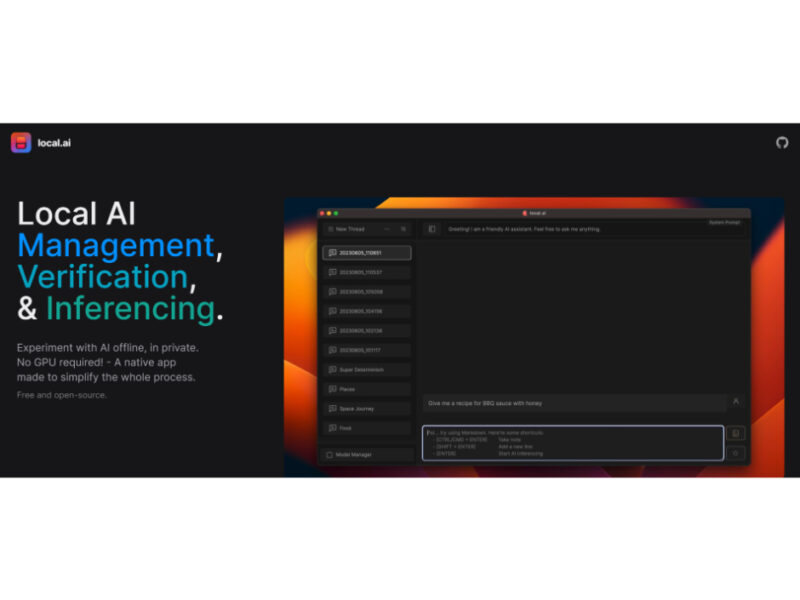

Local AI Playground

Local AI Playground is a native app that simplifies AI experimentation: it lets users explore AI models locally, without a complex technical setup or a dedicated GPU. This tool …

About Local AI Playground

Use Cases

Use Case 1: Secure and Private Data Analysis

Problem: Users dealing with sensitive or proprietary data cannot risk uploading information to cloud-based AI providers due to privacy concerns.

Solution: Local AI Playground runs models entirely offline, ensuring that data never leaves the user's local hardware.

Example: A legal professional using a local WizardLM model to summarize confidential case files without an internet connection.

Use Case 2: AI Exploration on Standard Hardware

Problem: Many AI tools expect an expensive dedicated GPU, which creates a high barrier to entry for students and hobbyists.

Solution: The app leverages CPU inferencing and GGML quantization to allow large language models to run on standard laptops and PCs.

Example: A student running a 7B parameter model on a base-model MacBook Air to learn about prompt engineering.
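The memory saving from quantization is simple arithmetic: weight storage is roughly parameter count × bits per weight ÷ 8. The sketch below applies that to a 7B model. The bits-per-weight figures are assumptions based on GGML-style block formats (one 16-bit scale stored per block of 32 weights), and the estimate covers the weights only, not the context cache or other runtime overhead.

```python
# Rough estimate of the memory needed just to hold model weights.
# ASSUMPTION: bits-per-weight values approximate GGML-style block
# quantization, where each block of 32 weights also stores a 16-bit scale.
BITS_PER_WEIGHT = {
    "f16": 16.0,  # plain half precision, no quantization
    "q5": 5.5,    # (32 * 5 bits + 16-bit scale) / 32 weights
    "q4": 4.5,    # (32 * 4 bits + 16-bit scale) / 32 weights
}


def weight_memory_gb(n_params: float, fmt: str) -> float:
    """Return approximate weight storage in decimal gigabytes (1e9 bytes)."""
    total_bits = n_params * BITS_PER_WEIGHT[fmt]
    return total_bits / 8 / 1e9


if __name__ == "__main__":
    for fmt in ("f16", "q5", "q4"):
        print(f"7B @ {fmt}: {weight_memory_gb(7e9, fmt):.2f} GB")
```

Under these assumptions a 7B model needs about 14.00 GB at f16, 4.81 GB at q5, and 3.94 GB at q4, which suggests why 4- and 5-bit files are the workable option on laptops with limited RAM.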

Use Case 3: Local API for App Development

Problem: Developers need a way to test AI integrations in their applications without incurring API costs or requiring internet access.

Solution: The built-in inferencing server provides a local streaming endpoint that can power third-party apps like window.ai.

Example: A developer building a private note-taking app that uses the local server for automated text summarization and tagging.
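The page does not document the server's request format, so the following is only a sketch of how an app might consume a local streaming endpoint. The URL, port, and `/completions` path, the `prompt`/`stream` request fields, the `text` field in each chunk, and the `[DONE]` end marker are all hypothetical; the sketch also assumes the stream follows the server-sent events convention of `data:` lines. Check the app's server settings for the real values.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# HYPOTHETICAL endpoint: replace with the address the running server reports.
SERVER_URL = "http://localhost:8000/completions"


def parse_sse_lines(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text of each `data:` payload in a server-sent-events stream.

    ASSUMPTION: each payload is JSON with a hypothetical "text" field.
    """
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separators, comments, and event names
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # hypothetical end-of-stream marker
            break
        yield json.loads(payload).get("text", "")


def stream_completion(prompt: str, url: str = SERVER_URL) -> Iterator[str]:
    """POST a prompt and yield generated text chunks as they arrive."""
    # HYPOTHETICAL request body fields.
    body = json.dumps({"prompt": prompt, "stream": True}).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # The HTTP response is a file-like object, so iterating it yields lines.
        yield from parse_sse_lines(resp)
```

A note-taking app could then print a summary as it streams in with `for chunk in stream_completion("Summarize these notes: ..."): print(chunk, end="", flush=True)`. Keeping the parser separate from the network call makes it easy to test without a running server.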

Key Features

- CPU-based local model inferencing

- GGML model support with q4 and q5 quantization and f16 weights

- Offline and private operation

- Centralized model management system

- Integrated streaming inferencing server

- BLAKE3 and SHA256 integrity verification

- Memory-efficient Rust-based architecture

- Cross-platform support for Windows, Mac, and Linux
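Integrity verification comes down to hashing a downloaded model file and comparing the digest with a published reference value. Below is a minimal standard-library sketch for SHA256; it reads the file in chunks because model files are often several gigabytes. How the app itself obtains reference hashes is not described here, and `sha256_of_file` and `verify_model` are hypothetical helper names. BLAKE3 is not in Python's standard library; the third-party `blake3` package offers a similar `update()`/`hexdigest()` interface.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in fixed-size chunks so large model files never load fully into RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # iter() with a sentinel keeps reading until f.read() returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: Path, expected_sha256: str) -> bool:
    """Return True if the file's SHA256 matches the expected hex digest (case-insensitive)."""
    return sha256_of_file(path) == expected_sha256.strip().lower()
```

For example, `verify_model(Path("wizardlm-7b.q4_0.bin"), expected)` (hypothetical file name) returns `False` if the download was truncated or altered, so the app can refuse to load it.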